During the Vietnam War, Secretary of Defense Robert McNamara, a Harvard Business School graduate, insisted that the war be fought with modern statistical methods, using what would today be called “data science.” As part of the “Hamlet Evaluation System,” Department of Defense officials took every bit of information from the front lines, quantified it, and fed it into machines that would recommend bombing targets and produce a numerical summary of how the war was progressing. These men in suits were intelligent, academically trained people, but their approach was fatally flawed. The Vietnam War was a disaster for the United States, in part because officials paid too much attention to the data and not enough to the underlying logic of their decisions.

This problem persists today. Harvard and its affiliates remain deeply connected to our political life, just as they were during the Vietnam War: McNamara was only one of many Harvard affiliates in the Kennedy brain trust, another being National Security Advisor and Harvard professor McGeorge Bundy. Yet if they are to generate solutions to present-day problems, they need a better approach than the one they used historically. With the invention of the computer and the ease of quantification comes the temptation to use data in every decision. However, for practical decision-making in the modern world, common sense, heuristics, and logic still trump data.

The Known Technical Problems of Using Data

It has been said that anything that needs to put “science” in its name is not really a science. Data science does not belong alongside biology, physics, and chemistry. A number of technical problems persist; statisticians are aware of them, but the techniques meant to address them remain insufficient.

First, there is the “bias-variance tradeoff.” The more variables you put into a model, the less bias it has, because all potentially relevant data is being accounted for. At the same time, however, the model’s variance increases, meaning that small changes to the data can have large effects on the model’s output. For example, consider a model that tries to predict a voter’s preference for a certain candidate. If you include variables like which TV shows the voter watches or which soda he or she prefers, you might find a real relationship between one of those variables and the voter’s preference, but you are also quite likely to find a spurious one produced by pure randomness.
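The variance half of this tradeoff shows up in a small simulation. This is a sketch under assumptions of our own, not anyone’s published model: the outcome depends on a single real variable `x`, and the “TV show” and “soda” style variables are modeled as 20 columns of pure noise. The hypothetical helper `prediction_spread` refits ordinary least squares (solved in plain Python via the normal equations) on 200 fresh samples of 30 voters and measures how much the prediction at one fixed point moves from sample to sample.

```python
import random
import statistics


def solve(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def fit_ols(rows, ys):
    """Ordinary least squares via the normal equations (X^T X) beta = X^T y."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * y for r, y in zip(rows, ys)) for i in range(k)]
    return solve(xtx, xty)


def prediction_spread(n_noise, trials=200, n=30, seed=0):
    """Refit the model on `trials` fresh samples of `n` voters and return the
    variance of its prediction at one fixed test point."""
    rng = random.Random(seed)
    test_point = [1.0, 1.0] + [rng.gauss(0, 1) for _ in range(n_noise)]
    preds = []
    for _ in range(trials):
        rows, ys = [], []
        for _ in range(n):
            x = rng.gauss(0, 1)
            junk = [rng.gauss(0, 1) for _ in range(n_noise)]  # hypothetical "TV show", "soda", ...
            rows.append([1.0, x] + junk)           # intercept, real signal, irrelevant columns
            ys.append(2.0 * x + rng.gauss(0, 1))   # only x actually drives the outcome
        beta = fit_ols(rows, ys)
        preds.append(sum(b * v for b, v in zip(beta, test_point)))
    return statistics.variance(preds)


small = prediction_spread(n_noise=0)
large = prediction_spread(n_noise=20)
print(f"1 real variable:             prediction variance {small:.3f}")
print(f"1 real + 20 irrelevant ones: prediction variance {large:.3f}")
```

Both models are unbiased in this setup, since each includes the one variable that matters; the point is that the version padded with irrelevant columns should give a much larger prediction variance, because each refit chases the noise in those extra columns.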

Furthermore, consider studies that achieve “statistical significance”: results that would occur by chance only a small percentage of the time if the theory being tested were false. If thousands of experimenters study the same problem, a few will almost surely obtain statistically significant results and publish them, even if their theory is incorrect and lacks support in the aggregate data. Similar problems have contributed to a replication crisis in social science, in which studies reported as statistically sound have failed to produce the same results when repeated. These are only a few of the many well-documented problems in statistics (for another, see p-hacking).
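The “thousands of experimenters” argument can be checked with a short simulation. This sketch assumes 1,000 hypothetical labs, each testing a theory that is false: every lab compares two groups drawn from the same distribution with a two-sample z-test (known standard deviation of 1) and calls the result significant at the conventional 0.05 threshold. The function name `run_null_study` and all parameters are illustrative choices, not taken from any real study.

```python
import random
from statistics import NormalDist, fmean


def run_null_study(rng, n_per_group=50):
    """One hypothetical lab compares two groups drawn from the SAME distribution,
    so any 'effect' it finds is pure chance. Returns a two-sided p-value from a
    z-test that assumes a known standard deviation of 1."""
    treated = [rng.gauss(0, 1) for _ in range(n_per_group)]
    control = [rng.gauss(0, 1) for _ in range(n_per_group)]
    z = (fmean(treated) - fmean(control)) / (2 / n_per_group) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


rng = random.Random(42)
p_values = [run_null_study(rng) for _ in range(1000)]
significant = sum(p < 0.05 for p in p_values)
print(f"{significant} of 1000 labs found a 'significant' effect that does not exist")
```

Roughly 5 percent of the labs, around 50, should cross the threshold. If only those labs publish, the literature reports an effect that the aggregate data do not support.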

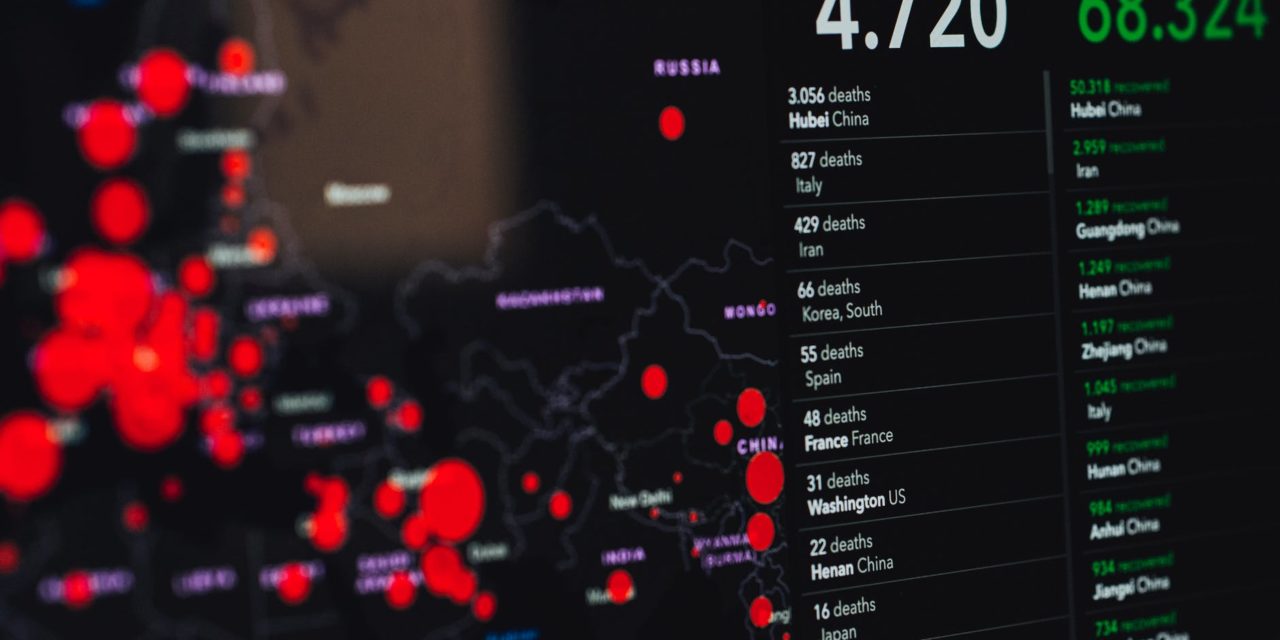

Using Logic Instead of Data During the Pandemic

The bottom line is this: if you are not a technical person, do not blindly listen to statisticians. The problem is not so much the Harvard Statistics Department, which is aware of many of these tradeoffs and issues. The problem arises when people outside the statistics field uncritically trust the “sophisticated” personalized prediction models that statisticians produce. One example is a recent Harvard Business Review article, “Leveraging AI to Battle this Pandemic — and the Next One,” which argued that public health officials should rely on “the technology of personalized prediction” during the COVID-19 pandemic. This would require us to trust artificial intelligence and machine learning to predict our individual preferences. Numbers sound impressive, but ask yourself: are you willing to put your health in the hands of business school professors and economists who interpret and use data so blindly?

This problem has serious consequences for society at large. On March 31, the Surgeon General was on TV explaining that “data doesn’t show” whether wearing masks helps prevent the spread of disease. By April 4, the same Surgeon General appeared in a video showing people how to make their own masks or face coverings, and by April 17, wearing masks in public places where social distancing could not be maintained had become mandatory for all New Yorkers. During this critical period, public health officials spent too long looking at data and not enough time thinking about the physical mechanisms by which masks and other precautions could prevent the spread of the coronavirus. Good data is difficult to collect, and studies, especially those that rely on participants wearing masks when study administrators are not watching, often cannot capture what they are trying to measure. Officials should have given more logical thought to the problem rather than simply following what the numbers told them.

One Solution: Heuristics

A solution to many of the ills associated with reliance on data is to use heuristics. A heuristic is defined as “a commonsense rule (or set of rules) intended to increase the probability of solving some problem.” One example you might have heard is “don’t fire until you see the whites of their eyes,” which William Prescott told his soldiers before the Battle of Bunker Hill. The moment you can see the whites of the enemy’s eyes may not be the optimal moment to start firing (what do eyes have to do with anything?), but the rule is a simple way to find an approximate solution. Another popular example is how people catch fly balls without knowing anything about physics. Gerd Gigerenzer and Henry Brighton hypothesize that part of what makes heuristics so useful is also what makes modeling so difficult. Because heuristics focus on only a few important variables, they sidestep much of the variance side of the bias-variance tradeoff described above, accepting a little bias in exchange for far less sensitivity to noise. Gigerenzer and Brighton write that this gives our heuristics an evolutionary advantage: they are at the heart of the decision-making capability that evolution gave us.
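A well-known heuristic from Gigerenzer’s research program, “take-the-best,” shows what focusing on only a few variables looks like in practice. The sketch below is a simplified version under our own assumptions: cues are binary, already sorted from most to least valid, and missing cues count as 0. The city data and cue names are hypothetical, chosen only to echo the classic “which city is larger?” task.

```python
import random


def take_the_best(a, b, cues, rng=random):
    """Simplified take-the-best: check cues from most to least valid and decide
    on the first cue that tells the two options apart; ignore all the rest."""
    for cue in cues:                      # cues are assumed pre-sorted by validity
        va, vb = a.get(cue, 0), b.get(cue, 0)
        if va != vb:                      # first discriminating cue decides
            return ("a" if va > vb else "b"), cue
    return rng.choice(["a", "b"]), None   # no cue discriminates: guess


# Hypothetical binary cues for "which city is larger?", ordered by assumed validity.
CUES = ["is_capital", "has_top_league_team", "has_intercity_station"]

city_x = {"is_capital": 0, "has_top_league_team": 1, "has_intercity_station": 1}
city_y = {"is_capital": 0, "has_top_league_team": 0, "has_intercity_station": 1}

choice, deciding_cue = take_the_best(city_x, city_y, CUES)
print(choice, deciding_cue)  # a has_top_league_team
```

The rule never weighs or adds cues together; once one cue discriminates, everything after it is ignored. That deliberate blindness is what keeps the heuristic from fitting noise in the way the padded regression model does.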

During the Vietnam War, you did not need mountains of data to tell you that we were losing; in fact, the data may have been misleading. Here is one heuristic that would have led an observer down the right path: ask five soldiers returning from the conflict how they thought the war was going. During the COVID-19 pandemic, officials should have thought about the physical properties and relative risks of masks rather than waiting for data to confirm what seemed obvious: that masks are an important complement to social distancing. For example, they should have considered this asymmetry: wearing a mask is almost costless, but the cost of not wearing one might be huge if their numbers were wrong.
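The asymmetry can be written as a one-line expected-cost comparison. The symbols are our own illustrative assumptions, not figures from any study: $c$ is the small cost of wearing a mask, $L$ is the loss from infections that masks would have prevented, and $p$ is the probability that masks actually help. Only costs that differ between the two choices are counted.

```latex
\mathbb{E}[\text{cost} \mid \text{mask}] = c,
\qquad
\mathbb{E}[\text{cost} \mid \text{no mask}] = p\,L,
\qquad
\text{mask preferred} \iff c < p\,L \iff p > \frac{c}{L}.
```

When $c \ll L$, the threshold $c/L$ is close to zero, so even weak evidence that masks help is enough to favor wearing them; there is no need to wait for the data to settle the question.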

At Harvard, students and faculty likewise should not trade logic for data in their research. While saying that we are making “evidence-based” or “data-driven” decisions may sound good in theory, these approaches can be disastrous in practice. Indeed, statistics as we know it today has only been around since the beginning of the 20th century, and data science as a concept is even newer. Could earlier thinkers reach sound decisions without big data or Bayesian inference? The answer is, of course, yes. We don’t need to rely exclusively on data to reach sound conclusions; we can use our brains the same way humanity has for thousands of years.

Image Credit: Pexels / Markus Spiske